The setup

Dan Meyer, a (former?) math teacher with some extraordinary ideas, has a nifty concept for teaching expected values:

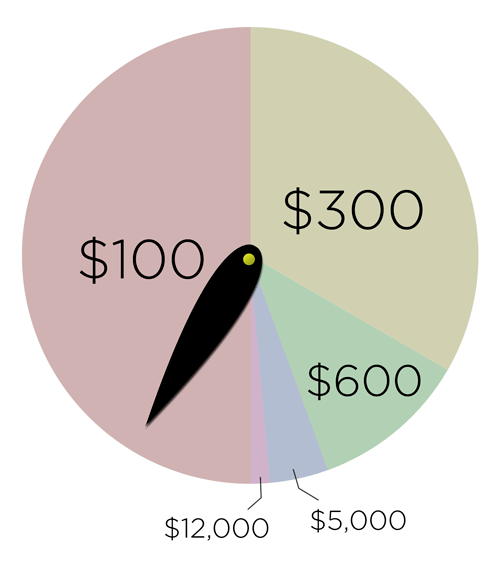

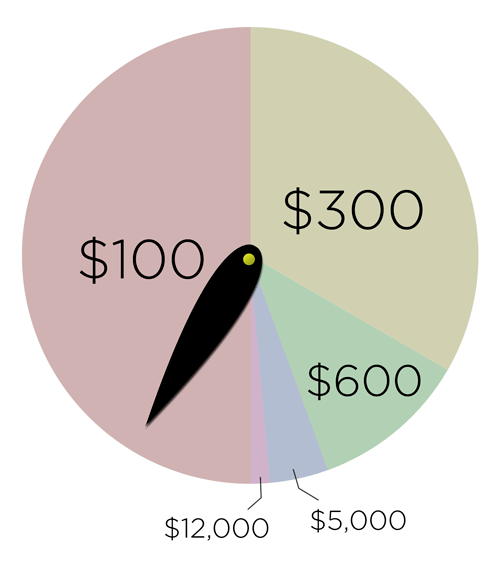

“So one month before our formal discussion of expected value, I’d print out this image, tack a spinner to it, and ask every student to fix a bet on one region for the entire month. I’d seal my own bet in an envelope.

I’d ask a new student to spin it every day for a month. We’d tally up the cash at the end of the month as the introduction to our discussion of expected value.

So let them have their superstition. Let them take a wild bet on $12,000. How on Earth did the math teacher know the best bet in advance?”

I absolutely love the idea of warming up their brains to this idea a month before you actually teach it, and getting them “hooked” by placing a bet and watching it play out over time.

The challenge

But there’s a problem: at least as presented, the intended lesson isn’t quite true. I’m taking it as a challenge to see if we can fix it without killing the wow-factor. Let’s try.

As I read it, the intended lesson here is: “if you’re playing the same betting game repeatedly, it’s good to bet on the option with the highest expected value.”

And the intended wow-factor comes from: “none of the options looked like an obvious winner to me, but my teacher knew which one would win!”

But the lesson just isn’t true with this spinner and time-frame: here, the highest-expected-value choice is actually NOT the one most likely to have earned the most money after only 20 or 30 spins.

And the wow-factor is not guaranteed: none of the choices is much more likely to win than the others in only 20-30 spins, so the teacher can’t know the winning bet in advance. It’s like you’re a magician doing a card trick that only works a third of the time. You can still have a good discussion about the math, but it’s just not as cool.

I’d like to re-design the spinner so that the lesson is true, and the wow-factor still happens, after only a month of spins.

Wait, is there really a problem?

First, what’s wrong with the spinner? By my eyeball, the expected values per spin are $100/2 = $50; $300/3 = $100; $600/9 = $67ish; $5000/27 = $185ish; and $12000/54 = $222ish. So in the LONG run, if you spin this spinner a million times, the “$12000” has the highest expected value and is almost surely the best bet. No question.
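Here is a minimal sketch of that arithmetic in R, using the same eyeballed probabilities and payouts as above (the variable names `probs`, `values`, and `ev` are just mine, for illustration):

```r
# Probabilities and payouts read off the spinner (eyeballed, as in the text).
probs  <- c(1/2, 1/3, 1/9, 1/27, 1/54)
values <- c(100, 300, 600, 5000, 12000)

# Sanity check: the regions should cover the whole spinner.
stopifnot(isTRUE(all.equal(sum(probs), 1)))

# Expected winnings per spin for a bet on each region.
ev <- probs * values
names(ev) <- paste0("$", values)
round(ev, 2)
```

The largest entry of `ev` is the one for “$12000”, which is what makes it the long-run favorite.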

But in Dan’s suspense-building setup, you only spin once a day for a month, for a total of 20ish spins (since weekends are out). With only 20 spins, the results are too unpredictable with the given spinner — none of the five choices is especially likely to be the winner.

How do we know? Instead of thinking “the action is spinning the spinner once, and we’re going to do this action twenty times,” let’s look at it another way: “the action is spinning the spinner twenty times in a row, and we’re going to do this action once.” That’s what really matters to the classroom teacher running this exercise: you get one shot to confidently place your bet at the start of the month. After a single month of daily spins, will the kids be wowed by seeing that you placed the right bet?

I ran a simulation in R (though sometime I’d like to tackle this analytically too):

Take one draw from a multinomial distribution with 20 trials (one per spin) and the same probabilities as Dan’s spinner. This gives the number of times each region came up that month.

Multiply the results by the values of each bet.

    > nr.spins <- 20
    > spins=rmultinom(1,size=nr.spins,prob=c(1/2,1/3,1/9,1/27,1/54))
    > spins
         [,1]
    [1,]   11
    [2,]    7
    [3,]    2
    [4,]    0
    [5,]    0
    > winnings=spins*c(100,300,600,5000,12000)
    > winnings
         [,1]
    [1,] 1100
    [2,] 2100
    [3,] 1200
    [4,]    0
    [5,]    0

For example, in this case we happened not to hit the “$5000” or the “$12000” at all. But we hit “$100” 11 times, “$300” 7 times, and “$600” twice, so someone who bet on “$300” would have won the most money that month.

Now, this was just for one month. Try it again for another month:

    > spins
         [,1]
    [1,]    8
    [2,]    9
    [3,]    1
    [4,]    2
    [5,]    0
    > winnings
          [,1]
    [1,]   800
    [2,]  2700
    [3,]   600
    [4,] 10000
    [5,]     0

This time we got “$5000” twice and whoever bet on that would have been the winner.

Okay, there’s clearly some variability as to who wins when you draw a new set of 20 spins. We want to know how variable this is.

So let’s do this many times — like a million times — and each time you do it, see which bet won that month. Keep track of how often each bet wins (and ties too, why not).

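    nr.sims <- 1000000
    bestpick <- rep(0,5)   # times each bet was the sole winner
    tiedpick <- rep(0,5)   # times each bet tied for first place
    nr.spins <- 20
    for(i in 1:nr.sims){
      # Simulate one month of spins and convert the counts to winnings
      spins <- rmultinom(1,size=nr.spins,prob=c(1/2,1/3,1/9,1/27,1/54))
      winnings <- spins*c(100,300,600,5000,12000)
      # Which bet (or bets, if tied) earned the most this month?
      best <- which(winnings==max(winnings))
      if(length(best)==1){
        bestpick[best] <- bestpick[best]+1
      } else{
        tiedpick[best] <- tiedpick[best]+1
      }
    }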

Results are as follows. The first number under bestpick is the rough proportion of times that “$100” would win; the last number is the rough proportion of times that “$12000” would win. Similarly for proportion of ties under tiedpick, except that I haven’t corrected for double-counting (since ties are rare enough not to affect our conclusions).

    > bestpick/nr.sims
    [1] 0.0145 0.2124 0.0712 0.3780 0.3029
    > tiedpick/nr.sims
    [1] 0.00199 0.02093 0.01893 0.00000 0.0000

(Ties, and the fact it’s just a simulation, mean these probabilities aren’t exactly right… but they’re within a few percentage points of their long-run value.)
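As a rough check on how much of that slack comes from simulation noise alone, here is a sketch using the usual binomial standard error, sqrt(p(1-p)/n), applied to the proportions reported above. The names `p.hat` and `se` are mine; the numbers are copied from the `bestpick/nr.sims` output.

```r
nr.sims <- 1000000

# Estimated win proportions, copied from the bestpick/nr.sims output above.
p.hat <- c(0.0145, 0.2124, 0.0712, 0.3780, 0.3029)

# Binomial standard error of each estimated proportion.
se <- sqrt(p.hat * (1 - p.hat) / nr.sims)
round(se, 5)
```

With a million simulated months, every standard error is well under a tenth of a percentage point, so most of the remaining fuzziness should come from how ties are counted rather than from Monte Carlo error.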

It turns out that the fourth choice, “$5000”, wins a little under 40% of the time. The highest-expected-value choice, “$12000”, only wins about 30% of the time. And “$300” turns out to be the winning bet about 20% of the time.

Unless I’ve made a mistake somewhere, this shows that using Dan’s spinner for one spin a day, 20 days in a row, (1) the most likely winner is not the choice with the highest expected value, and (2) the teacher can’t know which choice will be the winner — it’s too uncertain. So the lesson is wrong, and you can’t guarantee the wow-factor. That’s a shame.
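One way to double-check this without relying on simulation is to enumerate every possible month. With 20 spins and 5 regions there are only about ten thousand ways to split the spins, so a brute-force loop is feasible. The sketch below is my own illustration, not a polished analytic solution; `win.prob` and `tie.prob` are made-up names, and it uses base R’s `dmultinom` for the probability of each split.

```r
probs    <- c(1/2, 1/3, 1/9, 1/27, 1/54)
values   <- c(100, 300, 600, 5000, 12000)
nr.spins <- 20

win.prob <- rep(0, 5)  # exact probability that each bet is the sole winner
tie.prob <- 0          # exact probability that two or more bets tie for first

# Loop over every way to split nr.spins spins among the five regions.
for (n1 in 0:nr.spins) {
  for (n2 in 0:(nr.spins - n1)) {
    for (n3 in 0:(nr.spins - n1 - n2)) {
      for (n4 in 0:(nr.spins - n1 - n2 - n3)) {
        counts   <- c(n1, n2, n3, n4, nr.spins - n1 - n2 - n3 - n4)
        p        <- dmultinom(counts, prob = probs)  # probability of this month
        winnings <- counts * values
        best     <- which(winnings == max(winnings))
        if (length(best) == 1) {
          win.prob[best] <- win.prob[best] + p
        } else {
          tie.prob <- tie.prob + p  # each tied month is counted once here
        }
      }
    }
  }
}

round(win.prob, 4)
tie.prob
```

The entries of `win.prob` plus `tie.prob` add up to 1, and they should land close to the simulated proportions if the simulation is right.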

Dang. What to do, then?

Well, you can try spinning it more than once a day. What if you spin it 10 times a day, for a total of 200 spins? If we re-run the simulation above using nr.spins <- 200, here’s what we get:

    > bestpick/nr.sims
    [1] 0.000000 0.012258 0.000287 0.393095 0.589246
    > tiedpick/nr.sims
    [1] 0.000000 0.000332 0.000037 0.004780 0.005079

So it’s better, in that “$12000” really is the best choice… but it still has only about a 60% chance of winning. I’d prefer something closer to 90% for the sake of the wow-factor.

What if you have each kid spin it 10 times each day? Say 20 kids in the class, times 10 spins per kid, times 20 days, so 4000 spins by the month’s end:

    > bestpick/nr.sims
    [1] 0.000 0.000 0.000 0.106 0.892
    > tiedpick/nr.sims
    [1] 0.00000 0.00000 0.00000 0.00157 0.00157

That’s much better. But that’s a lot of spins to do by hand, and to keep track of…
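For a back-of-envelope feel for why it takes so many spins, here is a sketch of a normal approximation. It rests on simplifying assumptions of my own: with this many spins only “$12000” and “$5000” are really in contention, ties are ignored, and the central limit theorem is a fair guide (it is not for only 20 spins). The names `gap.vals`, `gap.probs`, and `approx.win` are made up for this example.

```r
# Per-spin payoff gap between a "$12000" bet and a "$5000" bet:
# +12000 with probability 1/54, -5000 with probability 1/27, 0 otherwise.
gap.vals  <- c(12000, -5000, 0)
gap.probs <- c(1/54, 1/27, 1 - 1/54 - 1/27)

gap.mean <- sum(gap.vals * gap.probs)
gap.sd   <- sqrt(sum(gap.vals^2 * gap.probs) - gap.mean^2)

# Normal approximation: P(total gap over n spins > 0) is roughly
# pnorm(sqrt(n) * mean / sd).
approx.win <- function(n) pnorm(sqrt(n) * gap.mean / gap.sd)
round(approx.win(c(200, 4000)), 3)

# Spins needed for about a 90% chance, under the same approximation.
n90 <- ceiling((qnorm(0.90) * gap.sd / gap.mean)^2)
n90
```

Under these assumptions the approximation comes out close to the simulated values for 200 and 4000 spins, and it suggests you need a little over 4000 spins to reach 90%. The per-spin edge of “$12000” over “$5000” is tiny compared with the spread of the payouts, which is why the favorite takes so long to pull ahead.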

Of course you could run a simulation on your computer, but I assume that’s nowhere near as convincing to the students.

What I’d really like to see is a spinner that gives more consistent results, so that you can be pretty sure after only 20 or 30 spins it’ll usually give the same winner. A simple example would be a spinner with only these 3 options: 1/2 chance of $100, 1/3 chance of $300, and 1/6 chance of $400.

    > bestpick/nr.sims
    [1] 0.0574 0.6977 0.2371
    > tiedpick/nr.sims
    [1] 0.00200 0.00783 0.00596

That’s okay, but there’s still only about a 70% chance of the highest-expected-value choice (“$300” here) being the winner after 20 spins. And anyway it’s much easier to guess “correctly” here, no math required, so it’s not as impressive if the teacher does guess right.

Hmmm. Gotta think a bit harder about whether it’s possible to construct a spinner that’s both (1) predictable and (2) non-obvious, given only 20 or so spins. Let me know if you have any thoughts.
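To make it quicker to try out candidate spinners, here is a sketch of a small helper that wraps the simulation in a function. `win.freq` is a hypothetical name of mine, not something from a package. It draws all the simulated months in one call to `rmultinom` and, unlike the loop above, only reports sole winners and drops tied months.

```r
# Hypothetical helper: estimate how often each bet is the sole winner
# after nr.spins spins of a spinner with the given probabilities and payouts.
win.freq <- function(probs, values, nr.spins = 20, nr.sims = 100000) {
  stopifnot(length(probs) == length(values))
  # One column per simulated month; one row per region of the spinner.
  spins    <- rmultinom(nr.sims, size = nr.spins, prob = probs)
  winnings <- spins * values              # values is recycled down each column
  top      <- apply(winnings, 2, max)     # best total in each month
  is.top   <- sweep(winnings, 2, top, "==")
  sole     <- colSums(is.top) == 1        # months with a single winner
  out      <- rowSums(is.top[, sole, drop = FALSE]) / nr.sims
  names(out) <- paste0("$", values)
  out
}

set.seed(1)  # only so that repeated runs give the same numbers
win.freq(c(1/2, 1/3, 1/6), c(100, 300, 400))
```

Calling it with a different `probs` and `values` (and, if you like, a different `nr.spins`) gives a quick read on whether a proposed spinner is predictable enough.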

Edit: I propose a better solution in the next post.